What does it mean to profile ones code? The main idea behind benchmarking or profiling is to figure out how fast your code executes and where the bottlenecks are. The main reason to do this sort of thing is for optimization. You will run into situations where you need your code to run faster because your business needs have changed. When this happens, you will need to figure out which parts of your code are slowing it down.

This article will only cover how to profile your code using a variety of tools. It will not go into actually optimizing your code. Let’s get started!

Using timeit

Python comes with a module called timeit. You can use it to time small code snippets. The timeit module uses platform-specific time functions so that you will get the most accurate timings possible.

The timeit module has a command line interface, but it can also be imported. We will start out by looking at how to use timeit from the command line. Open up a terminal and try the following examples:

python -m timeit -s "[ord(x) for x in 'abcdfghi']" 100000000 loops, best of 3: 0.0115 usec per loop python -m timeit -s "[chr(int(x)) for x in '123456789']" 100000000 loops, best of 3: 0.0119 usec per loop

What’s going on here? Well, when you call Python on the command line and pass it the “-m” option, you are telling it to look up a module and use it as the main program. The “-s” tells the timeit module to run setup once. Then it runs the code for n number of loops 3 times and returns the best average of the 3 runs. For these silly examples, you won’t see much difference.

Your output will likely be slightly different as it is dependent on your computer’s specifications.

Let’s write a silly function and see if you can time it from the command line:

# simple_func.py

def my_function():

try:

1 / 0

except ZeroDivisionError:

pass

All this function does is cause an error that is promptly ignored. Yes, it’s another silly example. To get timeit to run this code on the command line, you will need to import the code into its namespace, so make sure you have changed your current working directory to be in the same folder that this script is in. Then run the following:

python -m timeit "import simple_func; simple_func.my_function()" 1000000 loops, best of 3: 1.77 usec per loop

Here you import the function and then call it. Note that we separate the import and the function call with semi-colons and that the Python code is in quotes. Now we’re ready to learn how to use timeit inside an actual Python script.

Importing timeit for Testing

Using the timeit module inside your code is also pretty easy. You will use the same silly script from before and show you how below:

# simple_func2.py

def my_function():

try:

1 / 0

except ZeroDivisionError:

pass

if __name__ == "__main__":

import timeit

setup = "from __main__ import my_function"

print(timeit.timeit("my_function()", setup=setup))

Here you check to see if the script is being run directly (i.e. not imported). If it is, then you import timeit, create a setup string to import the function into timeit’s namespace and then we call timeit.timeit. You will note that we pass a call to the function in quotes, then the setup string. And that’s really all there is to it! Now let’s learn about how to write our own timer decorator.

Use a decorator

Writing your own timer is a lot of fun too, although it may not be as accurate as just using timeit depending on the use case. Regardless, you’re going to write your own custom function timing decorator!

Here’s the code:

import random

import time

def timerfunc(func):

"""

A timer decorator

"""

def function_timer(*args, **kwargs):

"""

A nested function for timing other functions

"""

start = time.time()

value = func(*args, **kwargs)

end = time.time()

runtime = end - start

msg = "The runtime for {func} took {time} seconds to complete"

print(msg.format(func=func.__name__,

time=runtime))

return value

return function_timer

@timerfunc

def long_runner():

for x in range(5):

sleep_time = random.choice(range(1,5))

time.sleep(sleep_time)

if __name__ == '__main__':

long_runner()

For this example, you import the random and the time modules from Python’s standard library. Then you create our decorator function. You will notice that it accepts a function and has another function inside of it. The nested function will grab the time before calling the passed in function. Then it waits for the function to return and grabs the end time. Now you know how long the function took to run, so you print it out. Of course, the decorator also needs to return the result of the function call and the function itself, so that’s what the last two statements are all about.

The next function is decorated with our timing decorator. You will note that it uses random to “randomly” sleep a few seconds. This is just to demonstrate a long running program. You would actually want to time functions that connect to databases (or run large queries), websites, run threads or do other things that take a while to complete.

Each time you run this code, the result will be slightly different. Give it a try and see for yourself!

Create a Timing Context Manager

Some programmers like to use context managers to time small pieces of code. So let’s create our own timer context manager class!

import random

import time

class MyTimer():

def __init__(self):

self.start = time.time()

def __enter__(self):

return self

def __exit__(self, exc_type, exc_val, exc_tb):

end = time.time()

runtime = end - self.start

msg = 'The function took {time} seconds to complete'

print(msg.format(time=runtime))

def long_runner():

for x in range(5):

sleep_time = random.choice(range(1,5))

time.sleep(sleep_time)

if __name__ == '__main__':

with MyTimer():

long_runner()

In this example, we use the class’s __init__ method to start our timer. The __enter__ method doesn’t need to do anything other then return itself. Lastly, the __exit__ method has all the juicy bits. Here we grab the end time, calculate the total run time and print it out.

The end of the code actually shows an example of using our context manager where we wrap the function from the previous example in our custom context manager.

Using cProfile

Python comes with its own code profilers built-in. There is the profile module and the cProfile module. The profile module is pure Python, but it will add a lot of overhead to anything you profile, so it’s usually recommended that you go with cProfile, which has a similar interface but is much faster.

We’re not going to go into a lot of detail about this module in this article, but let’s look at a couple of fun examples so you get a taste for what it can do.

>>> import cProfile

>>> cProfile.run("[x for x in range(1500)]")

4 function calls in 0.001 seconds

Ordered by: standard name

ncalls tottime percall cumtime percall filename:lineno(function)

1 0.000 0.000 0.000 0.000 <string>:1(<listcomp>)

1 0.000 0.000 0.000 0.000 <string>:1(<module>)

1 0.001 0.001 0.001 0.001 {built-in method builtins.exec}

1 0.000 0.000 0.000 0.000 {method 'disable' of '_lsprof.Profiler' objects}

Let’s break this down a bit. The first line shows that there were 4 function calls. The next line tells us how the results are ordered. According to the documentation, standard name refers to the far right column. There are a number of columns here.

- ncalls is the number of calls made.

- tottime is a total of the time spent in the given function.

- percall refers to the quotient of tottime divided by ncalls

- cumtime is the cumulative time spent in this and all subfunctions. It’s even accurate for recursive functions!

- The second percall column is the quotient of cumtime divided by primitive calls

- filename:lineno(function) provides the respective data of each function

You can call cProfile on the command line in much the same way as we did with the timeit module. The main difference is that you would pass a Python script to it instead of just passing a snippet. Here’s an example call:

python -m cProfile test.py

Give it a try on one of your own modules or try it on one of Python’s modules to see how it works.

line_profiler

There’s a neat 3rd party project called line_profiler that is designed to profile the time each individual line takes to execute. It also includes a script called kernprof for profiling Python applications and scripts using line_profiler. Just use pip to install the package. Here’s how:

pip install line_profiler

To actually use the line_profiler, we will need some code to profile. But first, I need to explain how line_profiler works when you call it on the command line. You will actually be calling line_profiler by calling the kernprof script. I thought that was a bit confusing the first time I used it, but that’s just the way it works.

Here’s the normal way to use it:

kernprof -l silly_functions.py

This will print out the following message when it finishes: Wrote profile results to silly_functions.py.lprof. This is a binary file that you can’t view directly. When you run kernprof though, it will actually inject an instance of LineProfiler into your script’s __builtins__ namespace. The instance will be named profile and is meant to be used as a decorator.

With that in mind, you can actually write your script:

# silly_functions.py

import time

@profile

def fast_function():

print("I'm a fast function!")

@profile

def slow_function():

time.sleep(2)

print("I'm a slow function")

if __name__ == '__main__':

fast_function()

slow_function()

So now you have two decorated functions that are decorated with something that isn’t imported. If you actually try to run this script as is, you will get a NameError because “profile” is not defined. So always remember to remove your decorators after you have profiled your code!

Let’s back up and learn how to actually view the results of our profiler. There are two methods we can use. The first is to use the line_profiler module to read our results file:

python -m line_profiler silly_functions.py.lprof

The alternate method is to just use kernprof in verbose mode by passing is -v:

kernprof -l -v silly_functions.py

Regardless which method you use, you should end up seeing something like the following get printed to your screen:

I'm a fast function!

I'm a slow function

Wrote profile results to silly_functions.py.lprof

Timer unit: 1e-06 s

Total time: 3.4e-05 s

File: silly_functions.py

Function: fast_function at line 3

Line # Hits Time Per Hit % Time Line Contents

==============================================================

3 @profile

4 def fast_function():

5 1 34 34.0 100.0 print("I'm a fast function!")

Total time: 2.001 s

File: silly_functions.py

Function: slow_function at line 7

Line # Hits Time Per Hit % Time Line Contents

==============================================================

7 @profile

8 def slow_function():

9 1 2000942 2000942.0 100.0 time.sleep(2)

10 1 59 59.0 0.0 print("I'm a slow function")

The source code is printed out with the timing information for each line. There are six columns of information here. Let’s find out what each one means.

- Line # – The line number of the code that was profiled

- Hits – The number of times that particular line was executed

- Time – The total amount of time the line took to execute (in the timer’s unit). The timer unit can be seen at the beginning of the output

- Per Hit – The average amount of time that line of code took to execute (in timer units)

- % Time – The percentage of time spent on the line relative to the total amount of time spent in said function

- Line Contents – The actual source code that was executed

If you happen to be an IPython user, then you might want to know that IPython has a magic command (%lprun) that allows you to specify functions to profile and even which statement to execute.

memory_profiler

Another great 3rd party profiling package is memory_profiler. The memory_profiler module can be used for monitoring memory consumption in a process or you can use it for a line-by-line analysis of the memory consumption of your code. Since it’s not included with Python, you’ll have to install it. You can use pip for this:

pip install memory_profiler

Once it’s installed, we need some code to run it against. The memory_profiler actually works in much the same way as line_profiler in that when you run it, memory_profiler will inject an instance of itself into __builtins__ named profile that you are supposed to use as a decorator on the function you are profiling.

Here’s a simple example:

# memo_prof.py

@profile

def mem_func():

lots_of_numbers = list(range(1500))

x = ['letters'] * (5 ** 10)

del lots_of_numbers

return None

if __name__ == '__main__':

mem_func()

In this example, you create a list that contains 1500 integers. Then you create a list with 9765625 (5 to the 10 power) instances of a string. Finally you delete the first list and return. The memory_profiler doesn’t have another script you need to run to do the actual profiling like line_profiler did. Instead you can just run Python and use its -m parameter on the command line to load the module and run it against our script:

python -m memory_profiler memo_prof.py

Filename: memo_prof.py

Line # Mem usage Increment Line Contents

================================================

1 16.672 MiB 0.000 MiB @profile

2 def mem_func():

3 16.707 MiB 0.035 MiB lots_of_numbers = list(range(1500))

4 91.215 MiB 74.508 MiB x = ['letters'] * (5 ** 10)

5 91.215 MiB 0.000 MiB del lots_of_numbers

6 91.215 MiB 0.000 MiB return None

The columns are pretty self-explanatory this time around. We have our line numbers and then the amount of memory used after said line was executed. Next we have an increment field which tells us the difference in memory of the current line versus the line previous. The very last column is for the code itself.

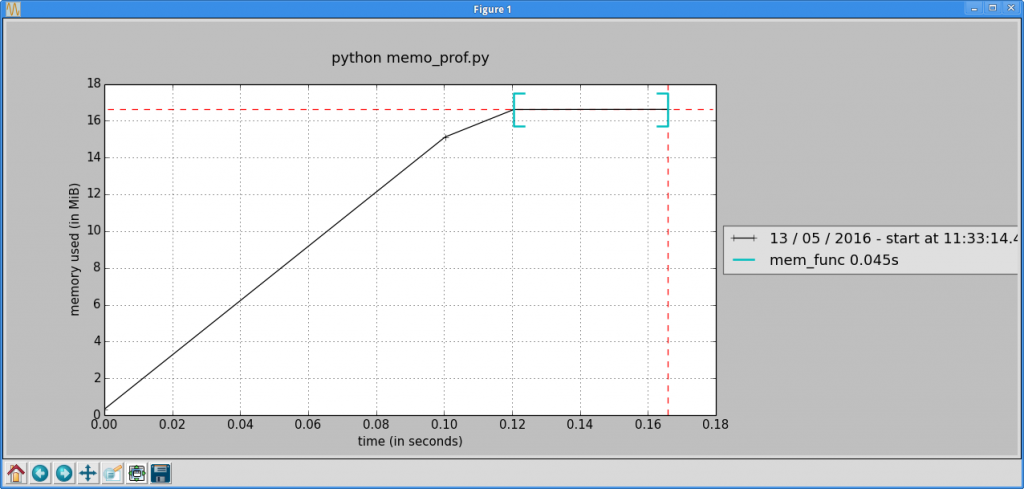

The memory_profiler also includes mprof which can be used to create full memory usage reports over time instead of line-by-line. It’s very easy to use; just take a look:

$ mprof run memo_prof.py mprof: Sampling memory every 0.1s running as a Python program...

mprof can also create a graph that shows you how your application consumed memory over time. To get the graph, all you need to do is:

$ mprof plot

For the silly example we created earlier, I got the following graph:

You should try running it yourself against a much more complex example to see a more interesting plot.

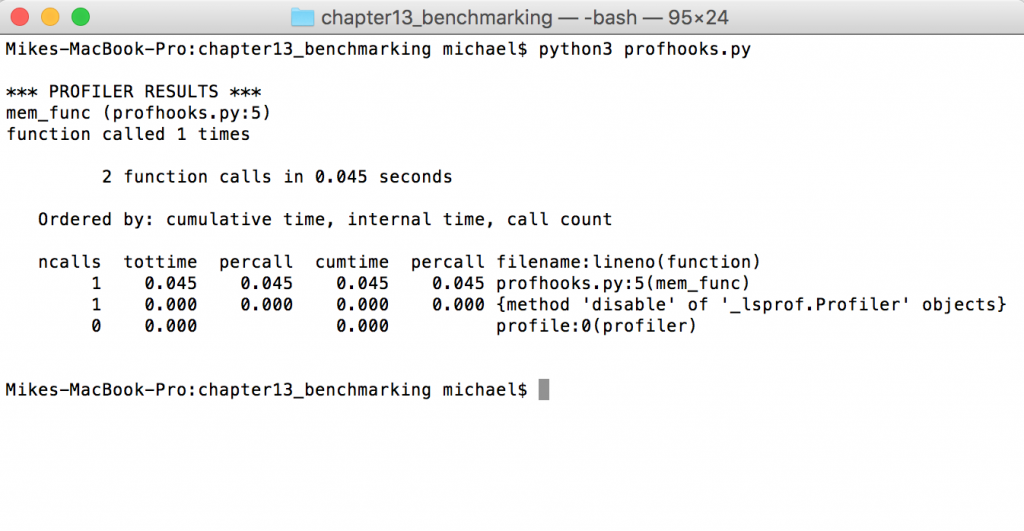

profilehooks

The last 3rd party package that you will look at in this article is called profilehooks. It is a collection of decorators specifically designed for profiling functions. To install profilehooks, just do the following:

pip install profilehooks

Now that we have it installed, let’s re-use the example from the last section and modify it slightly to use profilehooks:

# profhooks.py

from profilehooks import profile

@profile

def mem_func():

lots_of_numbers = list(range(1500))

x = ['letters'] * (5 ** 10)

del lots_of_numbers

return None

if __name__ == '__main__':

mem_func()

All you need to do to use profilehooks is import it and then decorate the function that you want to profile. If you run the code above, you will get output similar to the following sent to stdout:

The output for this package appears to follow that of the cProfile module from Python’s standard library. You can refer to the descriptions of the columns earlier in this chapter to see what these mean. The profilehooks package has two more decorators. The first one we will look at is called timecall. It gives us the course run time of the function:

# profhooks2.py

from profilehooks import timecall

@timecall

def mem_func():

lots_of_numbers = list(range(1500))

x = ['letters'] * (5 ** 10)

del lots_of_numbers

return None

if __name__ == '__main__':

mem_func()

When you run this piece of code, you will see something similar to the following output:

mem_func (c:\path_to_script\profhooks2.py:3): 0.141 seconds

All decorator does is time the execution time of the function but without the overhead of profiling. It’s kind of like using timeit.

The last decorator that profilehooks provides is called coverage. It is supposed to print out the line coverage of a single function. I didn’t really find this one all that useful myself, but you’re welcome to give it a try on your own.

Finally I just want to mention that you can also run profilehooks on the command line using Python’s -m flag:

python -m profilehooks mymodule.py

The profilehooks package is pretty cool and has lots of potential.

Wrapping Up

You covered a lot of information in this article. You learned how to use Python’s built-in modules, timeit and cProfile to time and profile your code, respectively. You also learned how to write your own timing code and use it as a decorator or a context manager. Then we moved on and looked at some 3rd party packages; namely line_profiler, memory_profiler*and profilehooks. At this point, you should be well on your way to benchmarking your own code. Give it a try and see if you can find any bottlenecks of your own.